Nowadays, the benchmark for a film’s word-of-mouth success seems to lie in its Rotten Tomatoes score. A high rating can propel a film to new heights, while a low one can sink its chances before it even arrives in theaters. How has the “Tomatometer” changed the film industry, for better or for worse?

An Unbalanced Scale

On its surface, the Tomatometer seems like a fair way to judge a film’s merits. It gives you a quick reference point for whether a film is worth your time. If 90% or more of critics liked a movie, it’s a safe bet; if fewer than half did, it probably has problems that warrant skipping it. Unfortunately, the system has to reduce each critic’s review to a simple “positive” or “negative.” Most of the time that won’t matter, but with a more nuanced or divisive movie, where critics have more to say than a simple “recommend” or “don’t,” it can lead to incomplete or even outright incorrect results.

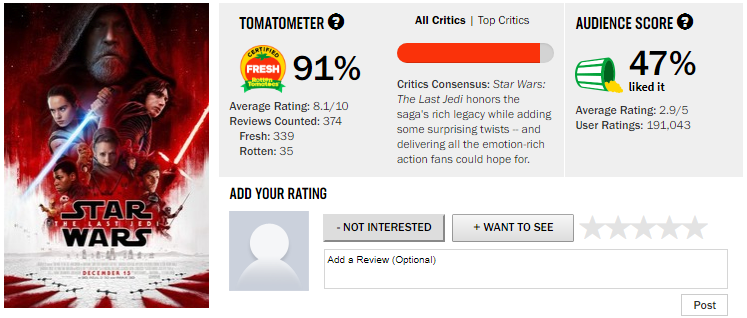

A perfect example of this is last year’s divisive Star Wars: The Last Jedi:

What gives with the massive divide between critic and audience scores? For one, you’ll notice that the critics’ “Average Rating” is considerably lower than the overall percentage. The Average Rating is built from each critic’s individual score rather than the binary positive/negative verdict, so it can capture some of the nuance in a critic’s opinion of a film. And The Last Jedi is a film with a lot of nuance. If a critic has a lot to say about a complex film like TLJ and winds up giving it, say, a 7/10 for not quite living up to its ambitions, is that the same as an average lowbrow blockbuster that gets a 7/10 for living up to the low expectations it set for itself?

Take this simple example. You ask two different pairs of people to rate a movie from 1 to 10. Both members of the first pair give the film a 7, while the second pair give it a 10 and a 4. In both cases the average score is 7, but the first pair indicates 100% approval while the second indicates only 50%. Herein lies the problem. The first pair’s score implies that the film is better than it is, because each of their merely above-average reviews counts as a full “Positive.” Meanwhile, the person in the second pair who liked it REALLY liked it, while the other REALLY didn’t, and their scores cancel out to the same average. Because the Tomatometer only counts positives and negatives, it can’t tell a lukewarm 7 from a rave, and therefore can’t give an accurate picture of a film’s standing among critics without digging deeper.
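To make the arithmetic concrete, here is a minimal Python sketch of the two pairs above. It assumes, purely for illustration, that any score of 7 or higher counts as a “positive” review; that cutoff is a hypothetical choice for this sketch, not how Rotten Tomatoes actually classifies reviews.

```python
# Hypothetical cutoff: a score at or above this counts as a "positive" review.
# This threshold is an assumption for illustration only.
POSITIVE_CUTOFF = 7

def average(scores):
    """Mean score on the 1-10 scale."""
    return sum(scores) / len(scores)

def approval_pct(scores):
    """Tomatometer-style share of positive reviews, as a percentage."""
    positives = sum(1 for s in scores if s >= POSITIVE_CUTOFF)
    return 100 * positives / len(scores)

pair_a = [7, 7]   # both mildly positive
pair_b = [10, 4]  # one rave, one pan

for name, scores in [("First pair", pair_a), ("Second pair", pair_b)]:
    print(f"{name}: average {average(scores):.1f}, approval {approval_pct(scores):.0f}%")
```

Both pairs print an average of 7.0, but approval comes out as 100% for the first pair and 50% for the second: the same kind of gap that can open up between a film’s Tomatometer and its Average Rating.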

Film discourse is complicated. Every film is different, and trying to grade them on a linear scale can sometimes feel like comparing apples to oranges. Sometimes I’ll trash one film but give it a C for effort, while for another I’ll praise its merits and still give it a C for being better than mediocre. It’s even more difficult if you then take these disparate scales and condense them into a single number, losing even more of the discussion in the process.

But surely the “average” issue isn’t the only factor at play here. What else might have led the latest Star Wars film to fall short of its high expectations with audiences?

Going With the Flow

I am not a conspiracy theorist. I say that because, while I don’t like to throw accusations around lightly, I do have a feeling that not all critic reviews are completely candid. When it comes to films with massive pre-release hype, critics will sometimes go easier on a film so as not to set themselves against it. I don’t for a second believe that there is collusion between the studios and the critics (just reading the reviews tends to dispel that theory pretty easily), but I do think there is a bit of a stigma attached to becoming the black sheep who goes against the grain on a film with a massive following.

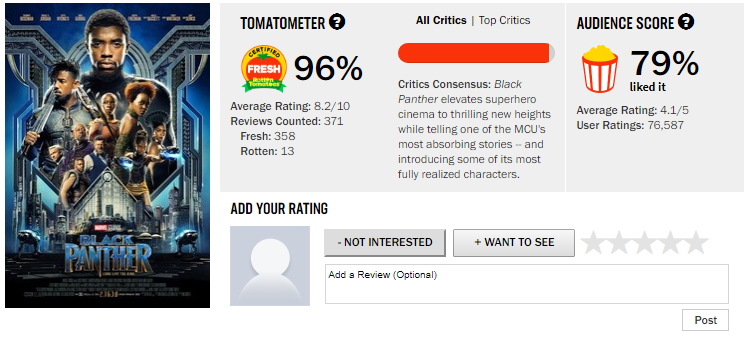

Take a look at Black Panther, for instance:

Several things fascinate me about this film. Some controversy arose during its initial review period because mega-fans were hunting down critics who gave rare negative reviews and calling them out on social media. That is clearly abnormal behavior, but every once in a while a film comes out with massive passion behind it (say, The Last Jedi), and early critics are pressured into maintaining the status quo to avoid backlash. Even critics who didn’t care much for Star Wars or Black Panther, and perhaps some who don’t enjoy their respective genres at all, were careful to stay just positive enough in their reviews to remain ambiguous about the film’s merit. The Tomatometer, of course, cares not about this ambiguity and simply spits out a number, leading to the disconnect we see. You don’t wanna be the outlier if the film ends up a smash hit! The safe bet is to stay positive and appease.

The other thing that fascinates me is that, despite a nearly 20-point drop-off between critics and audiences, the Average Rating for both is literally identical. So why the big dip in percentages? If the “average” critic and the “average” audience member both rate it around 8.2/10, why do nearly 20% fewer audience members like the film? To me, this indicates greater polarization among audience members: a higher proportion of fans rated it either really high (5 stars) or really low (1 star), because audience tastes tend to be more narrowly defined than critics’. What do I mean by that? Let’s explore:
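The same average paired with a lower approval share is what you’d expect from a more spread-out set of ratings. The sketch below uses invented ratings on a 10-point scale (not real data for Black Panther) in which both groups average exactly 8.2, and adds the standard deviation as a rough measure of polarization. The positive cutoff of 7/10 is an assumption, roughly corresponding to 3.5 stars out of 5.

```python
from statistics import mean, pstdev

# Invented ratings on a 10-point scale; both groups average exactly 8.2.
critics = [8, 8, 8, 8, 8, 8, 8, 8, 9, 9]          # tightly clustered
audience = [10, 10, 10, 10, 10, 10, 10, 8, 2, 2]  # mostly raves, a few pans

# Assumed cutoff for a "positive" rating (roughly 3.5 stars out of 5).
POSITIVE_CUTOFF = 7

def approval_pct(scores):
    """Share of ratings at or above the cutoff, as a percentage."""
    return 100 * sum(s >= POSITIVE_CUTOFF for s in scores) / len(scores)

for name, scores in [("Critics", critics), ("Audience", audience)]:
    print(f"{name}: mean {mean(scores):.1f}, "
          f"approval {approval_pct(scores):.0f}%, "
          f"spread (std dev) {pstdev(scores):.2f}")
```

Both groups have a mean of 8.2, but the critics clear the cutoff 100% of the time with a standard deviation of 0.40, while the audience’s mix of raves and pans yields 80% approval and a standard deviation of about 3.16.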

Genre Woes

One thing to remember about film critics is that most of them watch every kind of movie under the sun indiscriminately. With a few rare exceptions, the majority of the reviews you’ll see on Rotten Tomatoes come from people who take in a wide range of films every year, and who probably have their own likes and dislikes. If someone who prefers period dramas reviews an action film and doesn’t like it, does that mean fans of action won’t like it either? Not necessarily. The tragedy of Rotten Tomatoes is in the way it blends all movie fans together and presumes that all of their tastes are going to be the same.

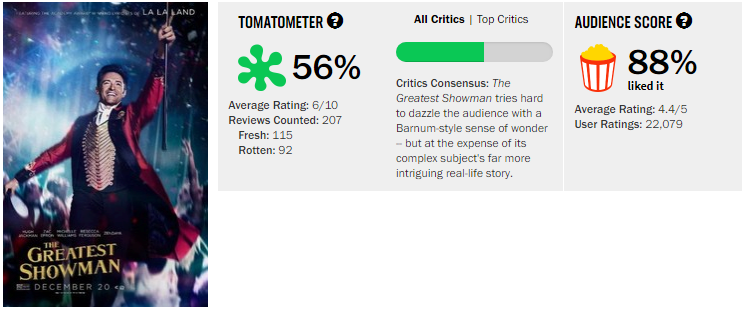

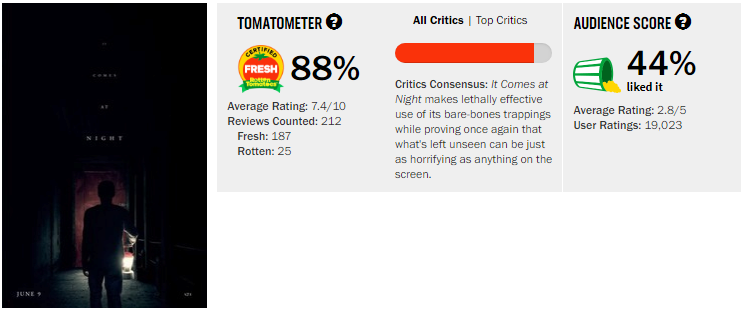

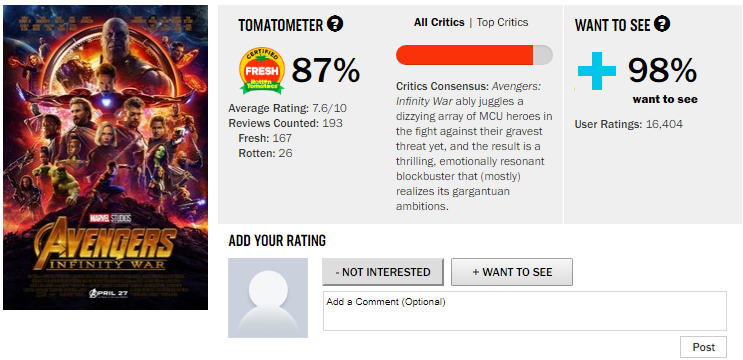

Heavy genre films tend to show the widest divide between critics’ and audiences’ perceptions of a film. A couple of examples from recent years:

Many critics felt that The Greatest Showman was a shallow, Oscar-baity musical that didn’t live up to the precedent set by the previous year’s La La Land, which is totally unfair considering this film was in development before that movie was even written! The film became a sleeper hit, finding a niche audience as die-hard fans of the musical genre, as well as families, came out in droves to see Hugh Jackman sing and dance. Whatever script issues or shallowness critics found in the film didn’t matter to the people who wanted to see it; the critical drubbing came from applying the same rubric used for high-minded works to a film that clearly isn’t aiming for that.

It Comes At Night was a massive letdown for audiences, largely because it was marketed as a horror film when it was really more of a psychological drama. Critics praised the film for its bravery in blurring genre lines and experimenting with the form, but thrill-seeking audiences who weren’t expecting such a challenging piece came away feeling blueballed. (Note also the abnormally low Average Rating for critics, indicating some ambivalence among them as well.)

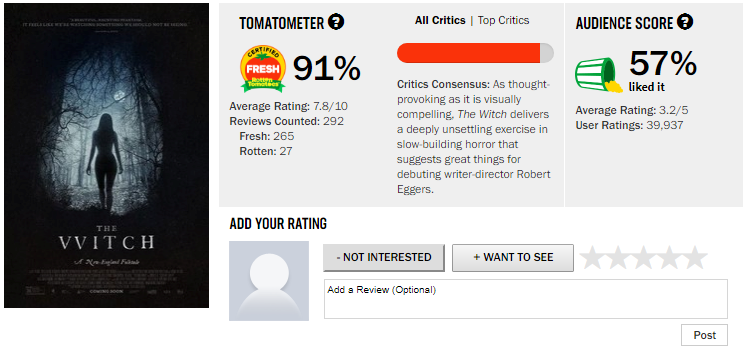

Similar to the previous example, The Witch was an unconventional, challenging horror film that critics lauded for its willingness to take risks. This film had a unique problem: it was hyped beyond belief before its release, with its Tomatometer score used in the marketing to get people excited to see it. And as with It Comes At Night, audiences who weren’t expecting such high-minded, metaphorical fare didn’t get what they wanted, leading to a divisive reception. Nothing on Rotten Tomatoes tells you when a genre film breaks from tradition or offers something different from other films of its kind… not from a single percentage built on binary verdicts, anyway.

The Tomatometer As A Marketing Tool

Often the public will accuse a film of being “pretentious” if it fares well with critics but doesn’t work for audiences. Many regard the Oscars, for example, as Hollywood patting itself on the back and rewarding esoteric films that only insiders enjoy. I don’t generally find this to be the case because, as I said, film critics come in all shapes and sizes from all over the world, and consensus among them doesn’t indicate elitism. Yet films that do well on the awards circuit almost universally score lower with audiences.

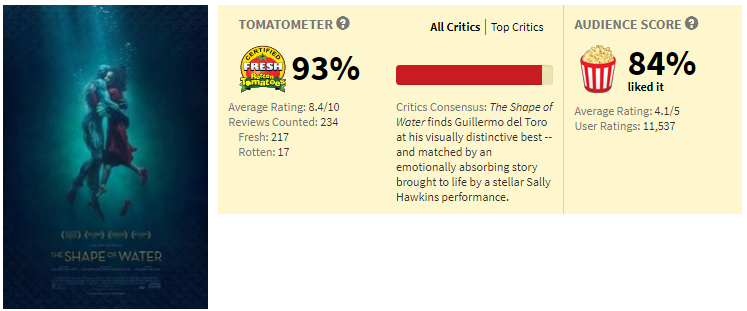

Could it simply be that audiences are affected by Hollywood’s praise of a film, use that as justification to see it, then come away disappointed? As an example, let’s look at last year’s Best Picture winner, The Shape of Water. Here is a snapshot taken of the film’s Rotten Tomatoes page on 12/28/17, three days after release:

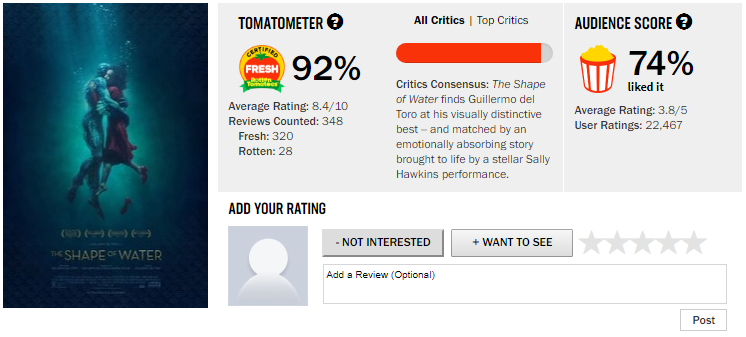

Sure, there’s a bit of a drop-off from critics to audiences, but an awful lot of people still liked the film. How about four months later, after the film went on its awards-season tear and came away the ultimate victor?

A massive 10% drop in audience opinion! What gives?

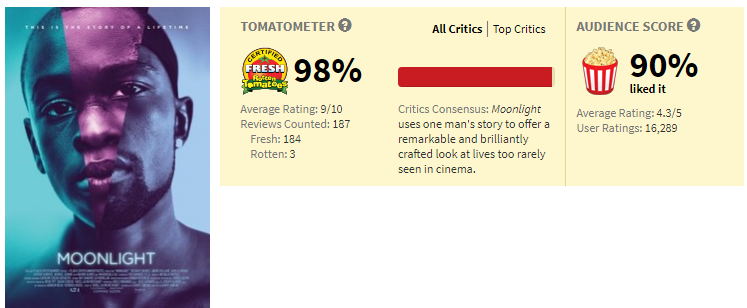

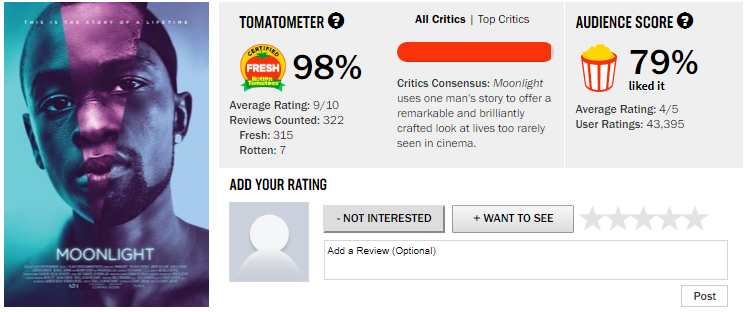

The exact same phenomenon occurred for the previous year’s big Oscar darling, Moonlight. Consider two snapshots, one taken in December 2016 shortly after release and the other taken today:

Now we come back to the topic of brigading. I tend to see the best in people and assume that things like online ratings are given with honest intentions. It would be easy to dismiss this phenomenon as salty anti-Hollywood voters conspiring to tank the rating out of spite, but I find that just as disingenuous as writing off Hollywood as “pretentious.” So when I see a drop like this, I assume there was some major shift in the type of audience going to see these films.

These two films are very niche, appealing to a pretty narrow subset of viewers. Early in their runs, the only people going to see them were those already inclined to see a del Toro monster flick or a gay black drama. But once these films started racking up the gold, more people learned of their existence, assumed from their high Tomatometer scores that they would enjoy them, and discovered that they didn’t. Meanwhile, you’ll note that the critics’ score barely moves, even though more professional critics discovered the films over the same period.
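The shift described here works like a weighted average. As a minimal sketch with invented numbers (not the films’ real rating counts), suppose a niche early audience rates a film 88% positive and an equally large wave of awards-season newcomers rates it 68% positive. Pooling the two drags the audience score down even though none of the early fans changed their minds.

```python
# Hypothetical rating batches: (number of ratings, number of positive ratings).
# These figures are invented for illustration; they are not real data for
# The Shape of Water or Moonlight.
early_niche = (1000, 880)   # fans who sought the film out: 88% positive
later_broad = (1000, 680)   # awards-season newcomers: 68% positive

def audience_score(*batches):
    """Percentage of all ratings that are positive, pooled across batches."""
    total = sum(n for n, _ in batches)
    positive = sum(p for _, p in batches)
    return 100 * positive / total

print(f"Before awards season: {audience_score(early_niche):.0f}%")
print(f"After awards season:  {audience_score(early_niche, later_broad):.0f}%")
```

This prints 88% before awards season and 78% after. If the newcomers had liked the film at the same 88% rate as the early fans, the pooled score would not move at all, which would be consistent with a steady critics’ score if later critics share the early critics’ tastes.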

The Tomatometer functions much like an Academy Award: a concrete marker of a film’s worth, even though such judgments usually exist in a gray area. Audiences these days are picky and don’t go to see every single movie that is released, but an award nomination or a high Tomatometer score can signal to them that a film worth seeing has come around. And if it doesn’t match their sensibilities, a disconnect is born. So when people accuse Hollywood of elitism because the films it nominates aren’t widely loved, perhaps it’s a self-fulfilling prophecy: the higher a film’s acclaim, the more likely people are to come away disappointed due to higher expectations.

Conclusion

The Tomatometer is a useful tool, to be sure, but its influence seems to have outgrown its usefulness. It has altered the way films are marketed, with films often having to choose between appealing to a wide audience or only to a small subset of dedicated fans. Because of the importance now assigned to the overall critics’ score, so much nuance is lost in the shuffle as actual thoughts are discarded in favor of a single, easily referenced number. And that really seems to be the crux of the issue: audiences nowadays just look at the Tomatometer score without thinking about what critics are actually saying about the films. It’s touted as a selling point for a film, when it really should just be a jumping-off point for conversation.

So what does this mean for the future? Well, whenever I see a film with a fairly high critics’ score but a lower Average Rating, or an ambivalent conversation surrounding a film, or a massively hyped extravaganza with unrealistic expectations, I start to suspect that audiences won’t love it as much as they think they will. But what upcoming film could possibly fall under all these different umbrellas…?

-AD